Why Privacy and Security Should Be at the Core of Every AI Initiative

AI is no longer a futuristic concept—it’s embedded in the tools we use every day. From intelligent chatbots to automated analytics and predictive systems, AI has become integral to digital transformation across industries.

But as AI grows more capable, managing its risks grows more complex, especially when it comes to data privacy and security. AI systems thrive on data, but that same data can also expose organizations to legal, ethical, and operational vulnerabilities if not handled with care.

This post explores the critical privacy and security considerations organizations need to address when deploying AI technologies. Whether you’re building internal tools or integrating third-party platforms, understanding these risks is vital for maintaining trust, compliance, and business resilience.

Why Privacy and Security Matter More Than Ever

The strength of AI lies in its ability to learn from large volumes of data. That data often includes personally identifiable information (PII), behavioral insights, financial records, or proprietary business data. Mismanagement or misuse—intentional or otherwise—can lead to:

• Regulatory penalties (e.g., for non-compliance with GDPR or HIPAA)

• Reputational damage and customer mistrust

• Operational disruption from data breaches

• Legal liability due to discriminatory or unethical algorithmic behavior

As AI adoption grows, so does scrutiny from regulators, consumers, and security professionals. Proactive risk management isn’t just a best practice—it’s a business imperative.

Key Privacy Considerations in AI Deployments

1. Data Minimization & Purpose Limitation

AI systems often rely on large data sets, but that doesn’t mean you should collect everything available. Organizations must:

• Collect only data that is relevant and necessary for the AI’s function.

• Clearly define the purpose of data use and stick to it.

• Avoid function creep—where data is repurposed without consent.

By limiting scope, you reduce exposure and simplify compliance efforts.
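As a rough illustration of the points above, here is a minimal sketch of purpose-bound field filtering. The purpose names, field names, and the `minimize` helper are all hypothetical; a real system would keep this registry in reviewed configuration rather than in code.

```python
# Hypothetical purpose registry: each declared purpose lists the only
# fields the AI feature is allowed to receive.
ALLOWED_FIELDS = {
    "churn_prediction": {"account_age_days", "plan", "monthly_logins"},
    "support_routing": {"plan", "ticket_text"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Return a copy of `record` with only the fields allowed for `purpose`."""
    if purpose not in ALLOWED_FIELDS:
        # Undeclared purposes are rejected, which guards against function creep.
        raise ValueError(f"undeclared purpose: {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


# Made-up customer record for illustration.
customer = {
    "email": "ana@example.com",
    "plan": "pro",
    "account_age_days": 412,
    "monthly_logins": 9,
    "date_of_birth": "1990-04-02",
}
features = minimize(customer, "churn_prediction")
```

Here `features` keeps only the three fields declared for churn prediction, and a call with an undeclared purpose fails loudly instead of silently passing data through.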

2. Informed Consent & Transparency

Users should know how their data is being used. In AI systems, where decision-making can feel opaque, it’s crucial to:

• Provide clear, accessible explanations of how data powers AI tools.

• Offer opt-ins, not opt-outs, for sensitive data processing.

• Enable users to revoke consent or request data deletion where applicable.

Transparency builds trust and aligns with ethical AI principles.
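One way to picture opt-in consent with revocation is a small registry that answers "no" unless a grant exists. This is only a sketch: the `ConsentRegistry` class and the purpose string are assumptions made for illustration, and a production version would need durable storage and an audit history.

```python
from datetime import datetime, timezone


class ConsentRegistry:
    """In-memory, opt-in consent store (illustrative only)."""

    def __init__(self):
        # Maps (user_id, purpose) -> time at which consent was granted.
        self._grants = {}

    def grant(self, user_id: str, purpose: str) -> None:
        self._grants[(user_id, purpose)] = datetime.now(timezone.utc)

    def revoke(self, user_id: str, purpose: str) -> None:
        # Revoking consent that was never given is a harmless no-op.
        self._grants.pop((user_id, purpose), None)

    def has_consent(self, user_id: str, purpose: str) -> bool:
        # Opt-in default: no record means no consent.
        return (user_id, purpose) in self._grants


registry = ConsentRegistry()
before = registry.has_consent("user-1", "voice_analysis")
registry.grant("user-1", "voice_analysis")
during = registry.has_consent("user-1", "voice_analysis")
registry.revoke("user-1", "voice_analysis")
after = registry.has_consent("user-1", "voice_analysis")
```

The design choice worth noting is the default: processing is blocked until the user acts, which is what distinguishes an opt-in from an opt-out.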

3. Bias, Fairness & Ethical Use

AI models can inherit or even amplify human bias present in training data. This leads to:

• Discrimination in hiring, lending, or healthcare outcomes

• Unequal access to services, or the spread of misinformation

• Regulatory scrutiny and public backlash

To mitigate this:

• Audit training data for diversity and bias

• Use explainable AI techniques to make decision-making more interpretable

• Involve interdisciplinary teams (e.g., ethicists, sociologists) in AI design
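A first-pass audit can be as simple as comparing outcome rates across groups. The sketch below computes per-group selection rates and their ratio on made-up hiring data; the function names and records are hypothetical. The "four-fifths" heuristic from US employment guidance treats a ratio below 0.8 as a signal worth investigating, though a single metric like this is a starting point, not a verdict.

```python
from collections import defaultdict


def selection_rates(records, group_key, outcome_key):
    """Share of positive outcomes per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        group = row[group_key]
        totals[group] += 1
        positives[group] += 1 if row[outcome_key] else 0
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Made-up hiring data for illustration only.
applicants = (
    [{"group": "A", "hired": True}] * 6 + [{"group": "A", "hired": False}] * 4
    + [{"group": "B", "hired": True}] * 3 + [{"group": "B", "hired": False}] * 7
)
rates = selection_rates(applicants, "group", "hired")
ratio = disparate_impact_ratio(rates)
```

In this toy data, group A is selected 60% of the time and group B 30% of the time, so the ratio lands at about 0.5, well under the 0.8 rule of thumb.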

Security Challenges of AI Systems

1. Data Breaches & Unauthorized Access

AI models often centralize valuable datasets, making them prime targets for cyberattacks. Risks include:

• Model inversion attacks, where sensitive training data is reconstructed from a model’s outputs

• API vulnerabilities in AI-as-a-Service platforms

• Weak encryption protocols during data transmission

Solutions include:

• End-to-end encryption of data pipelines

• Role-based access control (RBAC) for model access

• Regular penetration testing and code audits
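To make the RBAC item concrete, here is a minimal deny-by-default permission check. The role names, permission strings, and `deploy_model` function are hypothetical; in practice these checks usually live in an identity provider or API gateway rather than application code.

```python
# Hypothetical roles and permissions for a model-serving service.
ROLE_PERMISSIONS = {
    "data_scientist": {"model:predict", "model:evaluate"},
    "ml_engineer": {"model:predict", "model:evaluate", "model:deploy"},
    "analyst": {"model:predict"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: unknown roles and unlisted permissions are refused."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def deploy_model(role: str, model_name: str) -> str:
    """Guarded operation: only roles holding 'model:deploy' may proceed."""
    if not is_allowed(role, "model:deploy"):
        raise PermissionError(f"role {role!r} may not deploy models")
    return f"{model_name} deployed"


status = deploy_model("ml_engineer", "fraud-v2")
```

An analyst can still call the model for predictions, but any attempt to deploy raises `PermissionError`, and a role that is missing from the table gets no access at all.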

2. Adversarial Attacks

AI models, especially in computer vision and NLP, can be fooled by subtle input manipulations called adversarial examples. For instance, changing a few pixels in an image can cause a model to misclassify a stop sign.

Mitigation strategies include:

• Adversarial training (exposing models to manipulated inputs during development)

• Ongoing model monitoring and patching

• Using AI threat detection tools to flag anomalous behaviors
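To show how small a manipulation can be, the sketch below applies the fast gradient sign method (FGSM) to a toy logistic-regression model. The weights, input, and `epsilon` are made-up values chosen for illustration; real attacks and defenses target far larger models through a deep learning framework. Adversarial training would mix inputs crafted this way, with their correct labels, back into the training set.

```python
import math

# Toy logistic-regression "model" with fixed, made-up weights.
WEIGHTS = [2.0, -3.0, 1.0]
BIAS = 0.5


def predict_proba(x):
    """Probability of class 1 under the toy model."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def sign(value):
    """Return -1, 0, or 1 depending on the sign of `value`."""
    return (value > 0) - (value < 0)


def fgsm(x, true_label, epsilon):
    """Shift each feature by at most epsilon in the loss-increasing direction."""
    # For cross-entropy loss, d(loss)/d(x_i) = (p - y) * w_i.
    error = predict_proba(x) - true_label
    return [xi + epsilon * sign(error * w) for xi, w in zip(x, WEIGHTS)]


x = [0.2, 0.1, 0.1]
x_adv = fgsm(x, true_label=1, epsilon=0.15)
clean_p = predict_proba(x)    # above 0.5: classified as class 1
adv_p = predict_proba(x_adv)  # below 0.5: classified as class 0
```

No feature moves by more than 0.15, yet the predicted class flips, which is the behavior monitoring and adversarial training are meant to catch.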

3. Shadow AI & Unmonitored Deployments

Not all AI is deployed through official channels. Shadow AI refers to unauthorized or unvetted tools introduced by departments or individuals.

These tools may:

• Lack encryption or privacy safeguards

• Bypass organizational compliance frameworks

• Store data in unknown third-party clouds

Establishing internal governance, approval workflows, and clear policies helps mitigate this hidden risk.
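One lightweight governance check is to compare the AI endpoints seen in network logs against a registry of approved tools. The sketch below assumes such a registry exists; every domain, owner, and field name in it is invented for illustration.

```python
# Hypothetical registry of vetted AI tools, keyed by the domain they call.
APPROVED_AI_TOOLS = {
    "api.approved-llm.example": {"owner": "platform-team", "dpa_signed": True},
    "vision.internal.example": {"owner": "ml-team", "dpa_signed": True},
}


def find_shadow_ai(observed_domains):
    """Return observed AI endpoints that are missing from the registry."""
    return sorted(set(observed_domains) - set(APPROVED_AI_TOOLS))


# Domains as they might appear in egress or proxy logs (made up).
observed = [
    "api.approved-llm.example",
    "free-transcribe.example",
    "api.approved-llm.example",
    "quick-summarizer.example",
]
unapproved = find_shadow_ai(observed)
```

The two unregistered domains surface as candidates for review. A finding like this is best treated as the start of a conversation with the team involved, since shadow tools often point to a need the approved stack is not meeting.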

Building Privacy-First AI: A Holistic Approach

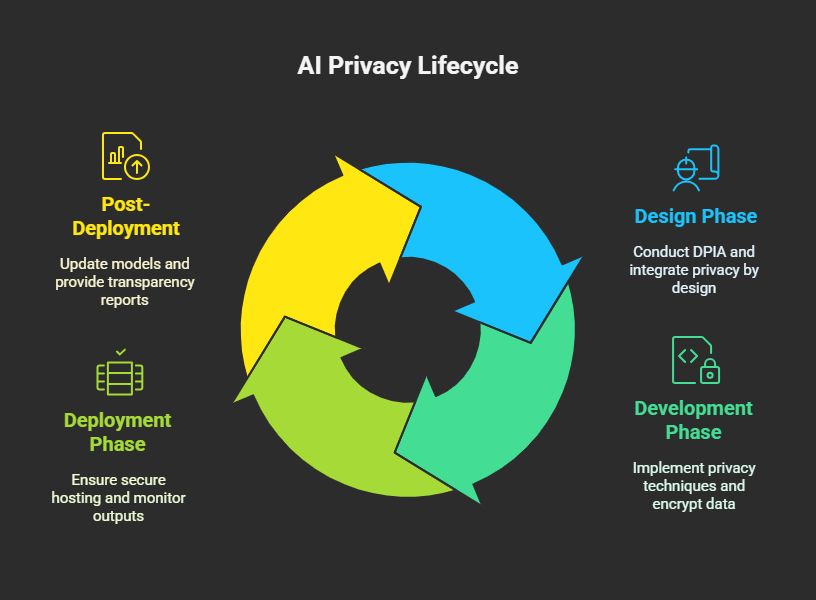

Data privacy and security cannot be afterthoughts in AI deployment—they must be embedded throughout the lifecycle.

Here’s a blueprint:

Design Phase

• Conduct a Data Protection Impact Assessment (DPIA)

• Integrate privacy by design (minimize data, anonymize inputs)

• Evaluate vendor security practices if using third-party AI
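As one small example of privacy by design, direct identifiers can be dropped or replaced with keyed tokens before data reaches a training pipeline. The sketch below uses HMAC-SHA256 from the Python standard library; the key, field names, and helper functions are assumptions. Note that this is pseudonymization rather than full anonymization: anyone holding the key can re-link tokens, so the output generally still counts as personal data.

```python
import hashlib
import hmac

# Assumption: in practice this key comes from a secrets manager, not source code.
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(value: str) -> str:
    """Replace an identifier with a keyed, repeatable token."""
    digest = hmac.new(PSEUDONYM_KEY, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def prepare_for_training(record: dict) -> dict:
    """Drop direct identifiers and keep only a token plus needed features."""
    return {
        "user_token": pseudonymize(record["email"]),
        "plan": record["plan"],
        "monthly_logins": record["monthly_logins"],
    }


row = prepare_for_training(
    {"email": "ana@example.com", "name": "Ana", "plan": "pro", "monthly_logins": 9}
)
```

The same email always maps to the same token, so records can still be joined across datasets without the email or name ever entering the training set.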

Development Phase

• Implement differential privacy or federated learning

• Encrypt training and testing datasets

• Use version control for datasets and models
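For a feel of what differential privacy involves, here is a minimal sketch of the Laplace mechanism applied to a counting query. The data, the `epsilon` value, and the helper names are made up, and Python's `random` module is not a cryptographically secure noise source, so a vetted differential privacy library would be the safer choice for real workloads.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Noisy count. Adding or removing one person changes a count by at
    most 1 (sensitivity 1), so the noise scale is 1 / epsilon."""
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(0)  # Seeded only so the sketch is repeatable.
ages = [23, 35, 41, 29, 52, 61, 38, 47]  # Made-up data.
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
```

The true count here is 4, and each call returns that value plus random noise. A smaller `epsilon` means more noise and stronger privacy, at the cost of accuracy.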

Deployment Phase

• Ensure secure hosting (e.g., air-gapped environments, cloud security best practices)

• Monitor model outputs for drift, bias, or anomalies

• Enable audit trails and logging for every inference request
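The monitoring and logging items above might look something like the following sketch: a wrapper that records each inference with a hash of the input rather than the raw data, plus a crude drift check on output scores. The model, version string, and scores are hypothetical, and the in-memory list stands in for an append-only log store.

```python
import hashlib
import json
import statistics
from datetime import datetime, timezone

audit_log = []  # Stand-in for an append-only log store.


def audited_predict(model_fn, model_version: str, features: dict):
    """Run one inference and record what ran and when, without raw inputs."""
    output = model_fn(features)
    payload = json.dumps(features, sort_keys=True).encode("utf-8")
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "input_sha256": hashlib.sha256(payload).hexdigest(),
        "output": output,
    })
    return output


def mean_shift(baseline_scores, recent_scores) -> float:
    """Shift in mean score, measured in baseline standard deviations."""
    spread = statistics.stdev(baseline_scores)
    gap = statistics.mean(recent_scores) - statistics.mean(baseline_scores)
    return abs(gap) / spread


def toy_model(features):  # Hypothetical model: a fixed linear score.
    return round(0.1 * features["monthly_logins"], 2)


score = audited_predict(toy_model, "churn-v1", {"monthly_logins": 9})
drift = mean_shift([0.4, 0.5, 0.6, 0.5], [0.8, 0.9, 0.85, 0.9])
```

Hashing the input keeps the audit trail useful for tracing a specific request without turning the log itself into another store of sensitive data. The alert threshold for `drift` is a judgment call that depends on the model and how noisy its scores normally are.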

Post-Deployment

• Regularly update models to patch vulnerabilities

• Schedule re-evaluations for data collection and consent

• Provide transparency reports to stakeholders and regulators

Industries Where It Matters Most

While every sector should prioritize AI security, it’s mission-critical in:

• Healthcare: Patient data privacy and HIPAA compliance

• Finance: Risk modeling, anti-fraud algorithms, and KYC data

• Education: Student privacy, predictive learning analytics

• Retail: Behavioral data collection and recommendation engines

Even creative fields using generative AI (e.g., in content or design) must ensure intellectual property and user data are handled ethically.

Privacy & Security Are Competitive Advantages

In today’s trust economy, privacy and security are more than compliance checkboxes—they’re brand differentiators.

Customers, employees, and partners are more likely to engage with organizations that:

• Explain how AI is used

• Demonstrate data accountability

• Commit to fairness, transparency, and protection

By taking a privacy-first approach to AI, you don’t just reduce risk—you build confidence and long-term value.

Final Thoughts

AI has immense potential to transform how we work, learn, and interact. But with that power comes responsibility.

Organizations must balance innovation with privacy, scalability with security, and automation with ethics. Whether you’re building AI from the ground up or integrating off-the-shelf platforms, privacy-conscious design and secure engineering practices must be central to your strategy.

Looking ahead, regulations will tighten, user awareness will grow, and security threats will evolve. But with a thoughtful, proactive approach, your organization can deploy AI confidently—without compromising on trust.

Because smart technology should always be built on a foundation of safe, responsible practices.

Ready to Build Secure, Privacy-First AI Systems?

Stay ahead of threats with AI solutions designed for safety and scalability.
